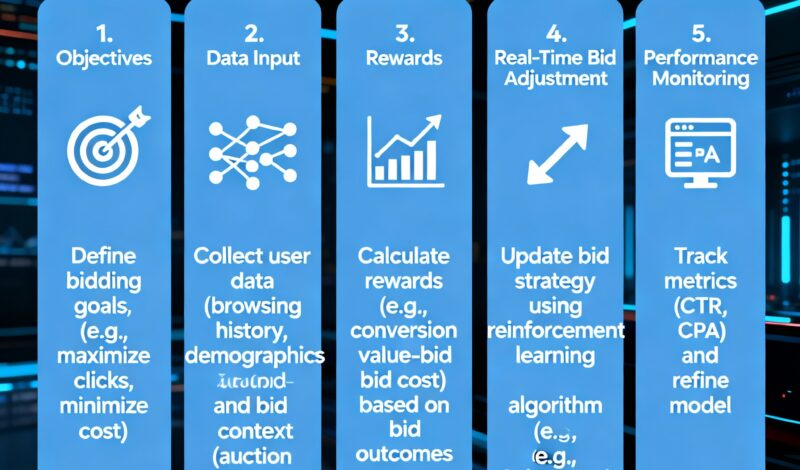

Understanding Reinforcement Learning in Ads

AI ad bidding with reinforcement learning (RL) uses algorithms that learn, by trial and error, which bids produce the best campaign results. An RL system repeatedly tests different bid amounts and targeting strategies. It receives feedback on each one through performance metrics such as clicks, conversions, and return on ad spend (ROAS), and shifts toward the choices that performed best. Unlike static rules or manual adjustments, it adapts automatically as new results arrive and identifies which actions deliver the highest ROAS.
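To make the trial-and-error loop concrete, here is a minimal Python sketch of an epsilon-greedy learner choosing among four bid levels. This is a simple multi-armed bandit, the stateless special case of RL. The auction simulator, its win-rate curve, the 50% conversion rate, and the $4 conversion value are all hypothetical assumptions chosen for illustration. They are not data from any ad platform.

```python
import random

# Hypothetical candidate bids (in dollars) the learner can choose between.
BID_LEVELS = [0.50, 1.00, 1.50, 2.00]


def simulate_auction(bid: float, rng: random.Random) -> float:
    """Toy environment (assumption): returns profit = conversion value - spend."""
    win_prob = min(1.0, bid / 2.0)      # assumed: higher bids win more auctions
    if rng.random() >= win_prob:
        return 0.0                      # lost the auction: no spend, no value
    converted = rng.random() < 0.5      # assumed 50% conversion rate when shown
    value = 4.0 if converted else 0.0   # assumed $4 value per conversion
    return value - bid                  # simplification: pay the full bid


def epsilon_greedy_bidding(steps: int, epsilon: float = 0.1, seed: int = 0):
    """Learn which bid level earns the most profit by trial and error."""
    rng = random.Random(seed)
    counts = [0] * len(BID_LEVELS)
    estimates = [0.0] * len(BID_LEVELS)  # running mean profit per bid level
    for _ in range(steps):
        if rng.random() < epsilon:
            arm = rng.randrange(len(BID_LEVELS))  # explore: try a random bid
        else:
            # Exploit: pick the bid with the best profit estimate so far.
            arm = max(range(len(BID_LEVELS)), key=lambda i: estimates[i])
        reward = simulate_auction(BID_LEVELS[arm], rng)
        counts[arm] += 1
        # Incremental mean update: new = old + (reward - old) / n
        estimates[arm] += (reward - estimates[arm]) / counts[arm]
    return counts, estimates


counts, estimates = epsilon_greedy_bidding(steps=100_000)
best = max(range(len(BID_LEVELS)), key=lambda i: estimates[i])
print(f"best bid: ${BID_LEVELS[best]:.2f}")
```

Under these toy assumptions, the expected profit per auction is (bid / 2) × (2 − bid), which peaks at a $1.00 bid, so the learner should settle there. The `epsilon` parameter controls how often the learner keeps testing other bids instead of exploiting the current best. That exploration keeps it from locking onto an early lucky result.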

Define Campaign Objectives

Before implementing RL, set clear goals:

- Maximize conversions or revenue

- Optimize for cost per acquisition (CPA)

- Target high-value users efficiently

- Increase customer lifetime value

Clear objectives define what “success” means for the RL model. The chosen goal becomes the reward signal the model learns to maximize, so it determines which bids the model treats as good decisions.
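One way to turn these objectives into something an RL model can optimize is to map each one to a reward computed from the outcome of a bid. The Python sketch below rests on several assumptions. The `Outcome` record and the objective names are hypothetical, the target CPA is set to $20, and the per-conversion lifetime-value estimate is supplied from elsewhere. Real platforms define their own objectives and attribution rules.

```python
from dataclasses import dataclass


@dataclass
class Outcome:
    """What happened after one bid (hypothetical fields for illustration)."""
    cost: float        # spend in dollars
    conversions: int   # conversions attributed to this bid
    revenue: float     # revenue from those conversions


def make_reward(objective: str, target_cpa: float = 20.0,
                ltv_per_conversion: float = 0.0):
    """Return a reward function for a campaign objective (a sketch, not a vendor API)."""
    if objective == "conversions":
        return lambda o: float(o.conversions)
    if objective == "revenue":
        return lambda o: o.revenue - o.cost  # profit after spend
    if objective == "target_cpa":
        # Positive when cost per conversion beats the target, negative otherwise.
        return lambda o: o.conversions * target_cpa - o.cost
    if objective == "lifetime_value":
        # Rewards conversions by their assumed long-term value, net of spend.
        return lambda o: o.conversions * ltv_per_conversion - o.cost
    raise ValueError(f"unknown objective: {objective}")


outcome = Outcome(cost=30.0, conversions=2, revenue=90.0)
for objective in ("conversions", "revenue", "target_cpa"):
    print(objective, make_reward(objective)(outcome))
```

Two conversions for $30 is a $15 CPA, which beats the $20 target, so the `target_cpa` reward comes out positive (10.0). The same spend with only one conversion would score -10.0. The same outcome can therefore look good or bad depending on which objective you choose.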

Feed the Model with Data

The RL system needs data inputs such as:

- Historical bid and spend data

- User behavior and engagement metrics

- Conversion tracking across channels

- Audience segmentation and device information

The more high-quality data the system receives, the faster it learns and the more accurately it predicts optimal bids.
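Before these inputs reach the model, they are typically condensed into a compact state that describes each bid opportunity. The Python sketch below makes several assumptions. The log fields (`device`, `segment`, `hour`, `past_sessions`) are hypothetical, the daypart split is arbitrary, and two prior sessions is an assumed engagement threshold. The sketch drops incomplete rows rather than guessing at missing values.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class BidContext:
    """Discretized state an RL bidder could condition on (hypothetical features)."""
    device: str    # e.g. "mobile", "desktop"
    segment: str   # audience segment label
    daypart: str   # coarse time-of-day bucket
    engaged: bool  # whether the user showed prior engagement


def daypart(hour: int) -> str:
    """Bucket an hour (0-23) into an assumed four-part day."""
    if 6 <= hour < 12:
        return "morning"
    if 12 <= hour < 18:
        return "afternoon"
    if 18 <= hour < 24:
        return "evening"
    return "night"


def to_context(event: dict) -> Optional[BidContext]:
    """Turn a raw log row into a state; return None if required fields are missing."""
    required = ("device", "segment", "hour", "past_sessions")
    if any(event.get(k) is None for k in required):
        return None  # low-quality record: skip it rather than guess
    return BidContext(
        device=event["device"],
        segment=event["segment"],
        daypart=daypart(int(event["hour"]) % 24),
        engaged=event["past_sessions"] >= 2,  # assumed engagement threshold
    )


ctx = to_context({"device": "mobile", "segment": "returning", "hour": 20, "past_sessions": 3})
print(ctx)  # BidContext(device='mobile', segment='returning', daypart='evening', engaged=True)
```

Because `BidContext` is frozen, and therefore hashable, it can serve directly as a dictionary key when storing a learned value per state.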

Set Rewards and Penalties

RL works on a reward-based system:

- Reward: High conversions, clicks, or sales from a bid

- Penalty: Wasted spend or low engagement

The AI continuously adjusts bids to maximize rewards while minimizing penalties, creating an evolving strategy that improves over time.
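A common way to formalize this is the Q-learning update below. It assumes that each auction's reward is conversion value minus spend. In the formula, $s_t$ is the bidding context, $a_t$ is the chosen bid, $v$ is the value of a conversion, $c_t$ is the amount spent, $\alpha$ is a learning rate, and $\gamma$ is a discount factor. These symbols are introduced here for illustration.

$$
r_t = v \cdot \mathbb{1}[\text{conversion at } t] - c_t
$$

$$
Q(s_t, a_t) \leftarrow Q(s_t, a_t) + \alpha \Bigl[ r_t + \gamma \max_{a'} Q(s_{t+1}, a') - Q(s_t, a_t) \Bigr]
$$

For example, take $v = \$40$ and a $\$1.20$ click. A converting click yields $r_t = 38.80$ (a reward), while a click that does not convert yields $r_t = -1.20$ (a penalty). Setting $\gamma = 0$ reduces the update to a running average of immediate rewards per context and bid, which is the contextual-bandit simplification.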

Real-Time Bid Adjustment

RL allows campaigns to adjust bids in real time based on user behavior, device type, location, and time of day:

- Increase bids for high-value audiences likely to convert

- Decrease bids for low-probability users

- Shift budgets dynamically across channels to maximize ROI

This adaptability helps keep spend efficient, even when market conditions fluctuate.
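The bid increases and decreases above can be sketched as value-based bidding. The idea is to bid the expected value of an impression (predicted conversion probability × conversion value), scale it to a ROAS target, and then clamp it. The Python sketch below also shows one simple way to shift budget toward channels with higher recent ROAS. It reserves a minimum share for each channel so the system keeps exploring. Several details are hypothetical: the function names, the bid limits, the 10% floor, and the assumption that you pay your bid per impression. In practice, the conversion probability would come from the model rather than from this code.

```python
def value_based_bid(p_convert: float, conversion_value: float, target_roas: float,
                    min_bid: float = 0.05, max_bid: float = 10.0) -> float:
    """Bid the expected value of an impression, scaled to hit a ROAS target.

    If you pay your bid, spending EV / target_roas to earn EV in expected
    revenue keeps revenue / spend at the target on average.
    """
    expected_value = p_convert * conversion_value
    bid = expected_value / target_roas
    return max(min_bid, min(max_bid, bid))  # clamp to the allowed bid range


def shift_budget(total: float, roas_by_channel: dict[str, float],
                 floor_share: float = 0.10) -> dict[str, float]:
    """Split budget in proportion to recent ROAS, keeping a minimum share per channel."""
    n = len(roas_by_channel)
    if n == 0:
        return {}
    if floor_share * n > 1:
        raise ValueError("floor_share is too large for this many channels")
    floor = total * floor_share
    remaining = total - floor * n  # budget left after every channel's floor
    weight_sum = sum(max(0.0, r) for r in roas_by_channel.values())
    alloc = {}
    for channel, roas in roas_by_channel.items():
        # Fall back to an even split if no channel has positive ROAS.
        share = max(0.0, roas) / weight_sum if weight_sum > 0 else 1.0 / n
        alloc[channel] = floor + remaining * share
    return alloc


print(value_based_bid(0.05, 80.0, 4.0))    # likely converter: 1.0
print(value_based_bid(0.0001, 80.0, 4.0))  # unlikely converter: floored to 0.05
print(shift_budget(1000.0, {"search": 4.0, "social": 2.0, "display": 2.0}))
# {'search': 450.0, 'social': 275.0, 'display': 275.0}
```

The per-channel floor is a design choice. Without it, a channel that had one bad week could receive zero budget and never produce the data needed to show that it has recovered.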

Monitor Performance & Refine

Even though RL automates bidding, human oversight is crucial:

- Track KPIs such as CPA, CTR, and ROAS

- Compare RL-driven campaigns against historical performance

- Adjust reward functions if business goals change

Continuous monitoring ensures alignment with strategic objectives.
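The KPI checks above can be partly automated. This Python sketch computes CTR, CPA, and ROAS from campaign totals. It then flags any metric where the RL campaign trails a baseline, such as historical performance for a comparable period, by more than a tolerance. The `CampaignStats` fields and the 10% tolerance are assumptions for illustration. The alerts are meant for a person to review, not to trigger automatic changes.

```python
from dataclasses import dataclass


@dataclass
class CampaignStats:
    """Campaign totals for one period (hypothetical structure)."""
    impressions: int
    clicks: int
    conversions: int
    cost: float
    revenue: float

    def ctr(self) -> float:
        return self.clicks / self.impressions if self.impressions else 0.0

    def cpa(self) -> float:
        return self.cost / self.conversions if self.conversions else float("inf")

    def roas(self) -> float:
        return self.revenue / self.cost if self.cost else 0.0


def compare_to_baseline(rl: CampaignStats, baseline: CampaignStats,
                        tolerance: float = 0.10) -> list[str]:
    """Return alerts where the RL campaign trails the baseline by more than `tolerance`."""
    alerts = []
    # Higher CPA is worse, so alert if it rises past the tolerance.
    if rl.cpa() > baseline.cpa() * (1 + tolerance):
        alerts.append(f"CPA {rl.cpa():.2f} vs baseline {baseline.cpa():.2f}")
    # Lower ROAS and CTR are worse, so alert if they fall past the tolerance.
    if rl.roas() < baseline.roas() * (1 - tolerance):
        alerts.append(f"ROAS {rl.roas():.2f} vs baseline {baseline.roas():.2f}")
    if rl.ctr() < baseline.ctr() * (1 - tolerance):
        alerts.append(f"CTR {rl.ctr():.2%} vs baseline {baseline.ctr():.2%}")
    return alerts


baseline = CampaignStats(impressions=10_000, clicks=200, conversions=20, cost=500.0, revenue=2000.0)
rl = CampaignStats(impressions=10_000, clicks=210, conversions=16, cost=500.0, revenue=1500.0)
print(compare_to_baseline(rl, baseline))
# ['CPA 31.25 vs baseline 25.00', 'ROAS 3.00 vs baseline 4.00']
```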

Real-World Example: E-Commerce Retailer

A major e-commerce brand tested reinforcement learning for Google Shopping campaigns:

- RL adjusted bids across thousands of products automatically

- High-value users received higher bids during peak purchase hours

- After 3 months:

  - ROAS improved by 35%

  - CPA decreased by 22%

  - Campaigns became self-optimizing with minimal manual input

The result: faster, smarter, and more profitable campaigns than traditional manual bidding.

Tools Supporting RL in Ad Bidding

- Google Smart Bidding – Uses machine learning to optimize toward target ROAS and target CPA.

- Meta Advantage+ Bidding – Continuously adjusts bids based on predicted conversion value.

- Albert AI – Fully autonomous RL-based cross-channel bidding.

- Adobe Sensei – Integrates RL algorithms to optimize programmatic campaigns.

These tools reduce manual effort while improving precision and ROI.

Challenges and Best Practices

- Data Requirements: RL needs high-quality historical data for fast learning.

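- Complexity: Defining the right reward function is critical. A misaligned reward can hurt performance; for example, rewarding clicks alone can push spend toward cheap traffic that rarely converts.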

- Monitoring: Regular oversight ensures campaigns remain on track and aligned with business goals.

Best Practice: Start with high-value campaigns and scale RL gradually to ensure accuracy and control.
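The gradual-scaling practice can be expressed as a simple rollout rule. Give the RL bidder a small share of traffic, grow that share while its CPA stays within a tolerance of the baseline, and pull back when it does not. The function name, the 10-point step, the 5% tolerance, and the 10% minimum share are all hypothetical choices for this sketch.

```python
def next_rl_share(current_share: float, rl_cpa: float, baseline_cpa: float,
                  step: float = 0.10, tolerance: float = 0.05,
                  min_share: float = 0.10, max_share: float = 1.0) -> float:
    """Return the share of traffic the RL bidder should get next period."""
    if rl_cpa <= baseline_cpa * (1 + tolerance):
        new_share = current_share + step  # performance holding: scale up
    else:
        new_share = current_share - step  # performance slipping: scale back
    # Clamp to the allowed range and round away floating-point noise.
    return round(min(max_share, max(min_share, new_share)), 2)


share = 0.10
for week, rl_cpa in enumerate([24.0, 25.0, 30.0, 24.0], start=1):
    share = next_rl_share(share, rl_cpa, baseline_cpa=25.0)
    print(f"week {week}: RL share {share:.0%}")
```

Keeping a nonzero `min_share` means the RL bidder continues to collect data during a bad stretch, so it has a chance to recover instead of being switched off entirely.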

Key Takeaways

Reinforcement learning in AI ad bidding offers three main advantages:

- Adaptive: Bids evolve automatically based on real-time performance

- Efficient: Budget is allocated dynamically to maximize ROI

- Scalable: Works across channels and campaigns without constant manual input

Brands that adopt RL in their bidding strategies can gain smarter campaigns, faster optimization, and measurable growth, which makes RL an important tool for modern performance marketing.